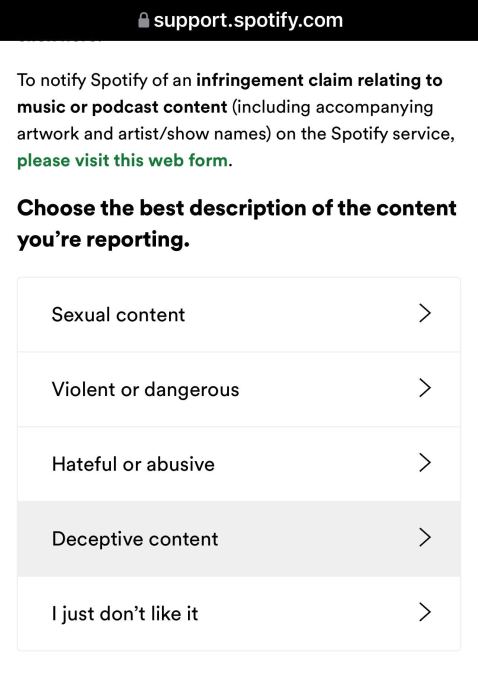

A number of Spotify playlist curators are complaining that the streaming music company is not addressing the ongoing issue of playlist abuse, in which bad actors report playlists that have gained a following in order to give their own playlists better visibility. Currently, Spotify users can report playlists in the app for a variety of reasons, such as sexual, violent, dangerous, deceptive, or hateful content. When a report is submitted, the playlist's metadata, including its title, description, and custom image, is removed immediately. There is no internal review process to verify that the report is legitimate before the metadata is removed.

Bad actors have learned how to abuse this system to give themselves an advantage. If they see that a rival playlist has more followers than their own, they report it in hopes of pushing their own playlist higher in search results.

According to the curators affected by this problem, there is no limit to the number of reports these bad actors can submit, either. The curators say their playlists are being reported daily, often multiple times a day.

The problem is not new. Users have been complaining about playlist abuse for years. A thread about it on Spotify's community forum is now some 30 pages deep and has accumulated over 330 votes. Victims of this type of harassment have also repeatedly taken to social media to raise public awareness of Spotify's broken system. For example, one curator noted last year that their playlist had been reported over 2,000 times and said they were receiving a new email about the reports nearly every minute. That kind of volume is common, and it suggests bad actors are using bots to submit their reports.

Many curators say they’ve repeatedly reached out to Spotify for help with this issue and were given no assistance.

The only way curators can appeal a takedown is by replying to Spotify's report email, and they don't always receive a response. When they ask Spotify for help with the issue, the company says only that it's working on a solution.

While Spotify may suspend the account responsible when a report is deemed false, the bad actors simply create new accounts to continue the abuse. Curators on Spotify's community forums have suggested that an easy fix for the bot-driven abuse would be to bar accounts from reporting playlists until they have streamed 10 hours of music or podcasts. That could help ensure a real person is behind an account before it gains permission to report abuse.

One curator, who maintains hundreds of playlists, said the problem had gotten so bad that they built an iOS app to continually monitor their playlists for this sort of abuse and to restore the metadata whenever a takedown is detected. Another wrote code that watches for report emails and uses the Spotify API to automatically restore their metadata after false reports. But not every curator has the ability to build an app or script of their own to deal with the situation.

Image Credits: Spotify (screenshot of reporting flow)

TechCrunch asked Spotify what it planned to do about this problem, but the company declined to provide specific details.

“As a matter of practice, we will continue to disable accounts that we suspect are abusing our reporting tool. We are also actively working to enhance our processes to handle any suspected abusive reports,” a Spotify spokesperson told us.

The company said it is currently testing several improvements to the process to curb the abuse, but would not say what those tests include or whether they are internal or external. Nor could it offer even a ballpark estimate of when its reporting system would be updated with these fixes. When pressed, the company said that, as a rule, it doesn't publicly share details about specific security measures, since doing so could make abuse of its systems more effective.

Many playlists are curated by independent artists and labels looking to promote themselves and get their music discovered, only to have their work taken down immediately, with no review process to separate legitimate reports from bot-driven abuse.

Curators complain that Spotify has been dismissing their cries for help for far too long, and the company's vague, noncommittal response about a coming solution only further validates those complaints.
