Facebook has been steadily rolling out updates to Facebook Groups aimed at giving admins better ways to manage and moderate their online communities. Recently, this has included a combination of product releases — like access to automated moderation aids and alerts about heated debates — as well as new policies aimed at keeping Groups in check. Today, Facebook says it’s introducing two other changes. It will now enforce stricter measures against Group members who break its rules and it will make some of its removals more transparent, with a new “Flagged by Facebook” feature.

Specifically, Facebook says it will begin to demote all Groups content from members who have broken Facebook’s Community Standards anywhere across its platform. In other words, bad actors on Facebook may see the content they share in Groups downranked, even if they haven’t violated any of that Group’s rules and policies.

By “demoting,” Facebook means it will show the content shared by these members lower in the News Feed. This is also called downranking and is an enforcement measure Facebook has used in the past to penalize content it wanted less of in News Feed — like clickbait, spam or even posts from news organizations.

In addition, Facebook says these demotions will get more severe as members accrue more violations across Facebook. Because Facebook’s algorithms rank content in News Feed in a way that’s personalized to each user, it could be difficult to track how well such demotions are working going forward.

Facebook also tells us the demotions currently only apply to the main News Feed, not the dedicated feed in the Groups tab where you can browse posts from your various Groups in one spot.

The company hopes this change will reduce rule-breaking members’ ability to reach others, and notes that it joins existing Groups penalties for rule violations, which include restricting users’ ability to post, comment, add new members to a group or create new groups.

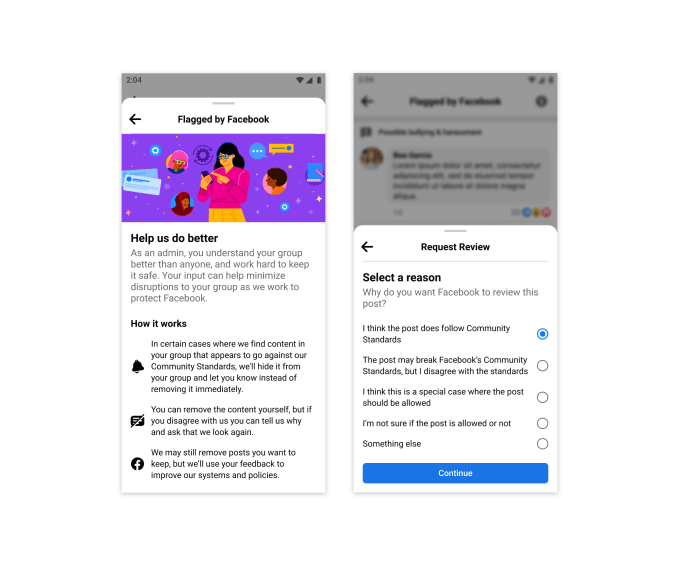

Another change is the launch of a new feature called “Flagged by Facebook.”

Image Credits: Facebook

This feature will show Group admins which content has been flagged for removal before it’s shown to their broader community. Admins can then choose to remove the content themselves or review the content to see if they agree with Facebook’s decision. If not, they can ask for a review by Facebook and provide additional feedback as to why they believe the content should remain. This could be helpful in the case of automated moderation errors. By stepping in and requesting reviews, admins could potentially protect members from an unnecessary strike and removal.

The feature joins an existing option to appeal a takedown to the Group admins when a post is found to violate Community Standards. Unlike that option, the new feature is about giving admins a way to be more proactively involved in the process.

Unfortunately for Facebook, systems like this only work when Groups are actively moderated. That’s not always the case. Though Groups may have admins assigned, if those admins stop using Facebook or managing the Group without adding another admin or moderator to take over, the Group can plunge into chaos, particularly if it’s a larger one. One member of a sizable group with more than 40,000 members told us their admin had not been active in the group since 2017. The members know this, and some will at times take advantage of the lack of moderation to post anything they want.

This is just one example of how Facebook’s Groups infrastructure is still largely a work in progress. If a company were building a platform for private groups from scratch, policies and procedures, such as how content removals work or what the penalty for rule-breaking is, likely wouldn’t be additions made years later. They would be foundational elements. And yet, Facebook is just now rolling out what ought to have been established protocols for a product that arrived in 2010.